Installation¶

The packages included in Helmoro support ROS Noetic on Ubuntu 20.04.

- Install ROS Noetic on Helmoro's Nvidia Jetson and on your local machine.

- Create a ROS Workspace

sudo apt install python3-catkin-tools

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/

catkin build

source devel/setup.bash

You must either run the above source command each time you open a new terminal window or add it to your .bashrc file so that it is sourced automatically.

- Clone the repository into your catkin workspace. The commands are stated in the following.

After that, clone the required repositories and make the required installations listed in the next section Dependencies.
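The .bashrc step from the workspace setup above can be sketched as follows. This is a minimal sketch, assuming the workspace lives in ~/catkin_ws as created above; the helper name add_setup_line is hypothetical and only used for illustration. It appends the source line once, so running it repeatedly does not clutter the file.

```shell
# Line that makes the workspace overlay available in every new terminal.
# Assumes the workspace path ~/catkin_ws used earlier in this guide.
SETUP_LINE="source ~/catkin_ws/devel/setup.bash"

# add_setup_line (hypothetical helper): append SETUP_LINE to the given file
# (default: ~/.bashrc) unless an identical line is already present.
add_setup_line() {
    target="${1:-$HOME/.bashrc}"
    touch "$target"
    # -x matches whole lines only, -F treats the pattern as a fixed string.
    if ! grep -qxF "$SETUP_LINE" "$target"; then
        echo "$SETUP_LINE" >> "$target"
    fi
}
```

Calling add_setup_line without an argument updates ~/.bashrc; open a new terminal afterwards for the change to take effect.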

Dependencies¶

The following packages and stacks are required to run Helmoro. Do not forget to build the packages once you have cloned them into your workspace.

Overview¶

- catkin_simple

- any_node:

  - message_logger

  - any_node

- joystick_drivers stack

- helmoro_rplidar

- ros_astra_camera_helmoro

- ros_imu_bno055

- Navigation Stack

- gmapping

- explore_lite

Clone or download all the required repositories and stacks together with the following commands:

sudo apt-get install ros-noetic-joy

cd ~/catkin_ws/src

git clone https://github.com/catkin/catkin_simple.git

git clone https://github.com/ANYbotics/message_logger.git

git clone https://github.com/ANYbotics/any_node.git

git clone https://github.com/Helbling-Technik/Helmoro_RPLidar

git clone --branch Helmoro_2.0 https://github.com/Helbling-Technik/ros_astra_camera_helmoro

sudo apt install ros-noetic-rgbd-launch

git clone https://github.com/dheera/ros-imu-bno055.git

sudo apt install ros-noetic-libuvc-camera ros-noetic-libuvc-ros ros-noetic-navigation ros-noetic-slam-gmapping

git clone https://github.com/hrnr/m-explore.git

cd ~/catkin_ws/

You should now be able to build all the installed packages with catkin build.

In order to build and run the object_detector and the hand_detector, you will need to install additional OpenCV libraries. Please see 5.11 OpenCV for details.

catkin_simple¶

catkin_simple is a package that simplifies the task of writing CMakeLists.txt for a package. It is used in several packages and is therefore required for them to build properly with catkin build.

any_node¶

any_node is a set of wrapper packages to handle multi-threaded ROS nodes. Clone or download the following repositories into your catkin workspace:

joystick_drivers stack¶

This stack allows a joystick to communicate with ROS. Note that the Helmoro packages work exclusively with the Logitech Wireless F710 joystick controller. In addition to the installation, it is useful to install the joystick testing and configuration tool jstest-gtk.

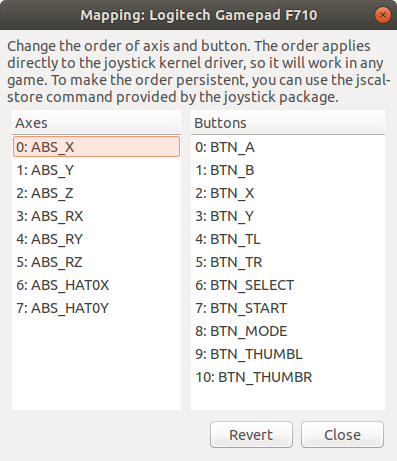

You can test the connection of your joystick by running jstest-gtk from a terminal. Please check the device name (Helmoro packages use the default joystick name "js0"). Also ensure that the joystick mapping matches the screenshot above.

Ensure that you save the mapping for next time.

If your joystick has a different name, either overwrite this value or pass your joystick name as an argument when launching the helmoro.launch file, which is explained in the following. To test the joystick functionality with ROS, run the following commands in two separate terminals:

If you listen to the topic /joy while using the joystick you should see the commands being published to the corresponding topic.
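The two commands themselves are not reproduced here. As a rough sketch using the standard joy package (installed above via ros-noetic-joy), and assuming a roscore is already running and the joystick enumerates as /dev/input/js0, a small pre-flight check could look like the following. The helper name joystick_present and the JOY_DEV variable are hypothetical; the commands it prints are one common way to test a joystick, not necessarily the ones the Helmoro authors intended.

```shell
# Default joystick device; "js0" is the name the Helmoro packages expect.
# JOY_DEV is a hypothetical override for joysticks with a different name.
JOY_DEV="${JOY_DEV:-/dev/input/js0}"

# joystick_present (hypothetical helper): succeeds only if the given path
# exists and is a character device, as joystick device nodes are.
joystick_present() {
    [ -c "$1" ]
}

if joystick_present "$JOY_DEV"; then
    # joy_node reads the device given by its private "dev" parameter
    # and publishes sensor_msgs/Joy messages on the /joy topic.
    echo "Terminal 1: rosrun joy joy_node _dev:=$JOY_DEV"
    echo "Terminal 2: rostopic echo /joy"
else
    echo "No joystick found at $JOY_DEV"
fi
```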

helmoro_rplidar¶

This package allows a Slamtec RPLidar to communicate with ROS. The repository has been derived from the official rplidar_ros. However, a small change in node.cpp had to be made for compatibility with the Helmoro and especially with the Navigation Stack.

The change that has been made can be found on line 61 of src/Node.cpp which now says:

Instead of previously:

Clone the helmoro_rplidar repository into your workspace. This will create a package that is still called rplidar_ros.

Before you can run the rplidar, check the permissions of its serial port.

To grant write permission on it:
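The exact commands are not shown here. A minimal sketch of the check, assuming the lidar enumerates as /dev/ttyUSB0 (an assumption; the actual device name depends on your system), is given below. The helper name port_status and the PORT variable are hypothetical. The script only reports the state and prints the chmod command rather than running it with sudo.

```shell
# Device path is an assumption; adjust it to where the RPLidar enumerates.
PORT="${PORT:-/dev/ttyUSB0}"

# port_status (hypothetical helper): print "missing", "writable" or
# "not-writable" for the given device path, from the current user's view.
port_status() {
    if [ ! -e "$1" ]; then
        echo "missing"
    elif [ -w "$1" ]; then
        echo "writable"
    else
        echo "not-writable"
    fi
}

case "$(port_status "$PORT")" in
    missing)      echo "$PORT not found; is the lidar plugged in?" ;;
    writable)     echo "$PORT is already writable" ;;
    not-writable) echo "Grant access with: sudo chmod 666 $PORT" ;;
esac
```

Note that permissions set with chmod are lost when the device is unplugged or the machine reboots, which is one reason for the fixed /dev/rplidar mapping described next.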

To fix the rplidar port and remap it to /dev/rplidar, enter the following command in your terminal:

Once you have remapped the rplidar USB port, update the serial_port value in the rplidar launch files accordingly.

You can run the rplidar as a standalone by typing the following command into your terminal:

For further information, head to:

ros_astra_camera¶

This package allows an Orbbec Astra RGB-D camera to communicate with ROS. Through it, images and point clouds coming from the camera as well as transformations between the different frames are published as topics.

Clone the ros_astra_camera_helmoro repository into your workspace, switch to the branch Helmoro_2.0, and install its dependencies by entering the following command into your terminal. Replace ROS_DISTRO with the ROS distribution you are currently using (in this case noetic):

sudo apt install ros-$ROS_DISTRO-rgbd-launch ros-$ROS_DISTRO-libuvc ros-$ROS_DISTRO-libuvc-camera ros-$ROS_DISTRO-libuvc-ros

You can run the astra camera as a standalone by typing the following command into your terminal:

We had to fork the normal ros_astra_camera repository, as we needed to change some small values in the tf of the camera.

ros_imu_bno055¶

This repository provides a node that lets the BNO055 IMU, which is built into Helmoro, publish its fused as well as its raw data over ROS via i2c.

Clone the ros_imu_bno055 repository into your workspace.

In order to get the imu_bno055 package to work, first check if the IMU shows up in the i2c-ports.

Furthermore, check that you can run:

You should be able to see your device at address 0x28, which is the default address of the IMU BNO055.
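The commands for this check are not shown here. As a sketch, assuming the IMU is wired to I2C bus 1 (an assumption; the bus number depends on which pins are used) and that the i2c-tools package provides i2cdetect, the scan output can be inspected for address 0x28 as follows. The helper name has_bno055 is hypothetical.

```shell
# List the I2C bus device nodes known to the kernel.
ls /dev/i2c-* 2>/dev/null || echo "no I2C bus device nodes found"

# has_bno055 (hypothetical helper): read i2cdetect output on stdin and
# succeed if a device answers at 0x28, i.e. the cell "28" in row "20:".
has_bno055() {
    awk '$1 == "20:" { for (i = 2; i <= NF; i++) if ($i == "28") found = 1 }
         END { exit !found }'
}

# Usage on the robot (bus number 1 is an assumption):
#   sudo i2cdetect -y -r 1 | has_bno055 && echo "BNO055 found at 0x28"
```

If the scan shows the device at a different address, the address is configured differently from the default and the launch parameters must be adjusted to match.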

If everything works, you can run your IMU by simply launching:

gmapping¶

Gmapping is a SLAM algorithm that can be used for the task of mapping an environment using a Lidar and the robot's odometry information.

Gmapping can be installed using the following command:

For more details about SLAM gmapping, head to section Slam using Gmapping

Navigation Stack¶

The navigation stack allows Helmoro to navigate autonomously by using the sensor data of the rplidar, astra camera and odometry.

Install the navigation stack by typing the following command into your terminal:

For more details about the navigation stack, head to section Autonomous Navigation using the Navigation Stack

explore_lite¶

In order to let Helmoro map its environment autonomously, you can make use of the explore_lite package.

Clone the explore_lite repository into your workspace.

For more details about explore_lite, head to section Autonomous Slam using explore_lite

OpenCV¶

Background¶

The Jetson Nano's default software repository contains a pre-compiled OpenCV 4.1 library (can be installed using sudo apt install ...).

The pre-compiled ROS tools all use OpenCV 3.2 (if ROS is installed using sudo apt install ...). If a custom ROS node uses OpenCV, it will use OpenCV 4.1 when being compiled, and is thus not compatible with other ROS tools/nodes (you might be lucky, but the object_detector uses incompatible OpenCV functions). It is thus required to install OpenCV 3.2 on the Jetson Nano, and since no pre-compiled library of OpenCV 3.2 for the Jetson Nano exists, this must be done from source.

Google's mediapipe uses OpenCV 4, and it is straightforward to compile the hand_detector node. However, GPU support is not enabled in the pre-compiled OpenCV 4.1 library. Hence, the OpenCV 4.1 library must also be installed from source if the hand_detector node should run on the GPU (recommended).

Installing OpenCV 3.2 or 4¶

- Create a temporary directory, and switch to it:

- Download the sources for OpenCV 3.2 or OpenCV 4 (any version > 4 should work) into ~/opencv_build. You will need both the opencv and opencv_contrib packages. The source files can be downloaded under the following links: opencv, opencv_contrib.

- Make a temporary build directory and unzip the folders in your build directory. The folder structure should look like this:

- Create a build directory, and switch to it:

- Set up the OpenCV build with CMake. For a basic installation:

cmake -D CMAKE_BUILD_TYPE=RELEASE \
    -D CMAKE_INSTALL_PREFIX=/usr/local \
    -D INSTALL_C_EXAMPLES=ON \
    -D INSTALL_PYTHON_EXAMPLES=ON \
    -D OPENCV_GENERATE_PKGCONFIG=ON \
    -D OPENCV_EXTRA_MODULES_PATH=~/opencv_build/opencv_contrib/modules \
    -D BUILD_EXAMPLES=ON ..

To configure your OpenCV build more easily, install a CMake GUI with sudo apt install cmake-qt-gui or sudo apt install cmake-curses-gui, and run it with cmake-gui (or ccmake for the curses version). To run the hand_detector on GPU, which is based on Google's mediapipe, you need to configure your OpenCV build to support CUDA/GPU.

- Start the compilation process:

Modify the -j option according to the number of cores of your processor. If you don't know the number of cores, type nproc in your terminal. The compilation will take a lot of time. Go grab a coffee and watch some classic YouTube videos.

- To verify whether OpenCV has been installed successfully, type the following command.

(adjust the command for opencv3, opencv2, ...).
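The compile and verification steps above can be sketched as follows. This is an illustration under assumptions, not the authors' exact commands: it derives the -j value from nproc and queries pkg-config, which works because the CMake configuration above sets OPENCV_GENERATE_PKGCONFIG=ON. The helper name opencv_version is hypothetical, and the pkg-config module names opencv4 (OpenCV 4.x) and opencv (OpenCV 3.x) are assumptions that may differ on your installation.

```shell
# One parallel make job per core reported by nproc.
JOBS="$(nproc)"
echo "Build with: make -j$JOBS"
echo "Install with: sudo make install"

# opencv_version (hypothetical helper): print the version of the first
# OpenCV pkg-config module found, or fail if none is registered.
opencv_version() {
    for module in opencv4 opencv; do
        if pkg-config --exists "$module" 2>/dev/null; then
            pkg-config --modversion "$module"
            return 0
        fi
    done
    echo "OpenCV not found via pkg-config" >&2
    return 1
}

# Run the check without aborting the script when OpenCV is not installed yet.
opencv_version || true
```

On memory-constrained boards it can be worth choosing a -j value below the core count, since each compiler job needs a substantial amount of RAM.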